Everyday Systems: Podcast: Episode 76

My Friend, ChatGPT

Hi, this is Reinhard from Everyday Systems.

Last episode I talked about a technique for stepping away from technology while at the same time being inspired by it. Just as technology takes metaphors from the physical world to build user interfaces like “the Desktop” or “folders” (skeuomorphism is the technical term for this), we can do this in reverse and take metaphors from technology to build offline human, psychological systems – apps for our psyche.

Inspired by Frank Herbert’s Dune, I called these things Apps for Mentats and I suggested that a lot of the Everyday Systems fall into this category. Not just obvious ones like Personal Punchcards, which actually uses reverse skeuomorphism in its name, but also the No S Diet, Shovelglove, and Urban Ranger.

Many of the devious, manipulative psychological tricks devised by greedy tech marketers to hijack our attention are available to us too, for our own, offline system building. Instead of just bemoaning how social media etc. has us in thrall, let’s learn from the enemy. It just occurred to me that even Weekend Luddite makes use of a reverse skeuomorphism: the “whitelist” of approved apps or activities.

So that was about avoiding technology – and avoiding technology with technology-inspired images and techniques, to really stick it to the machine. That’s one half, I propose, of what it takes to have a healthy relationship with technology.

Today, I’m going to talk about the other half: how to positively engage with technology, specifically with what looks to be the transformative technology of our time, ChatGPT and other human-like AI, in a sane and moderate way. Or at least, I’m going to describe how I have been trying to do so. It’s less about specific tips and hacks than about the big picture of how to relate to this thing: right relationship with robots, writ large. It’s still practical, I hope, but high-level practical, more about strategic orientation than low-level hacks and tactics.

One nice thing about the ChatGPT interface is that it’s clear that you are interacting with an AI. In other applications that’s less so, and will become increasingly less so. More and more, you’re going to have to assume that every interaction you have, with search engines, with software, with anything you read on the web, with emails and other writings from (supposed) humans, is at least mediated by AI. So this chat interface is refreshingly honest.

I know, at this point, that I should affect jaded familiarity, and roll my eyes, dropping allusions to all the New Yorker articles I’ve read about ChatGPT. But I have to admit, I’ve been kind of blown away by what it can do, how human-like it can seem, and how far its capabilities have come even just since I started playing around with it less than a year ago. I remember asking the 3.5 version “what is shovelglove” and receiving a mix of reality and full-on hallucination:

“Shovelglove is a fitness program that involves using a weighted shovel handle as a makeshift barbell to perform exercises. The program was created by Sebastian Knight, a self-described ‘fitness philosopher’”

I had to look up “Sebastian Knight.” It’s a striking name. It turns out he’s the title character in “The Real Life of Sebastian Knight,” a Vladimir Nabokov novel. So as hallucinations go, it’s rather stylish. “The Real Life” is good, too, in the context of a hallucination. And I love “fitness philosopher!” Much better than the “random person on the internet” I’ve always thought of myself as.

So I’ll definitely have to read the book – and who knows, maybe there is even something about a sledgehammer in there.

More recently I asked version 4 of ChatGPT: “Quid est shovelglove?” and got a perfectly accurate answer in flawless Latin. No hallucinations, and, I’d venture to guess, better grammar than 99% of people alive today who claim to know Latin.

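“Shovelglove est nomen datum exercitio physico quod creatum est ab Reinhard Engels, qui conceptum suum in blog nomine ‘Everyday Systems’ descripsit.”

(“Shovelglove is the name given to a physical exercise created by Reinhard Engels, who described his concept in a blog named ‘Everyday Systems.’”)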

It goes on for three paragraphs like this. Not bad at all considering that these are probably the first Latin words uttered by any kind of intelligence anywhere on the subject of shovelglove.

Now, this is a silly example, and many of my interactions with ChatGPT fall into this category. But I’ve also used it to do the following 10 quasi-useful things:

1. Write, debug, and explain code for my job in R and Python. It’s also very good at correcting formulas for Google Sheets, if you’re a spreadsheet nerd (attention Life Log practitioners). I paste the code or formula into the chat and ask what’s wrong. It first explains, in English, what it thinks I’m trying to do, usually better than I could myself; then it proposes a fix, always beautifully formatted and commented for easy copy and paste, and often dead right. Even if it isn’t 100% what I need, it moves me forward. If I get error messages, I paste them in and it proposes fixes. When I consider that it isn’t actually running the code, that it’s writing this stuff blind, the equivalent of me writing it with pencil and paper without being able to test whether it’s right, I’m even more amazed. Just this week the “Code Interpreter” plugin was released to all “Plus” subscribers, which does let it run and check code, but I’m glad I got to see what it could do flying blind.

2. Improve the wording of emails and other documents and explain those improvements, sometimes with several rounds of back and forth. I don’t like every change, but I do like a surprising number of them, and most are at least worth thinking about. I imagine that if it had access to my sent email and Google Docs it could even get my voice down perfectly; that’s the one big thing I miss. I’m sure there will be a plugin for that soon, which is both exciting and terrifying. For official correspondence and documents, which are supposed to sound bland, as if written by committee, it’s utterly perfect. You can also get it to rewrite the same content for different audiences, say, 10-year-old kids or non-native English speakers, or in other languages entirely.

3. Get me started on a card for a bat mitzvah present that I was mentally blocked on. Everything it came up with was pretty awful, but then a light went on: “It’s supposed to be pretty awful!” Now, any time I’m writing something and have writer’s block, I ask ChatGPT to get me started. Even if I hate what it writes and don’t use a word of it, it’s usually enough to get me unblocked; it gives me something to react against.

4. Conduct mock job interviews with tough, aggressive questions a human friend might be afraid to ask, and simulate high-stakes meetings to prep for them. It’s great at any kind of role-playing, even just to satisfy my intellectual curiosity, like getting it to impersonate historical figures.

5. I’ve had it literally role-play, that is, be dungeon master for me and my ten-year-old son. This was a mixed success; the goblins had a LOT of hit points. But it astonishes me that a general-purpose AI could even begin to do something like this, with its mix of improvisational storytelling and highly technical rules.

6. Explain tricky philosophical or even spiritual questions, really digging into the bits that confuse or interest me, say the difference between Abrahamic, Buddhist and Hindu conceptions of soul. It’s a little trippy to hear the ghost in the machine speculating about the Spirit of the Universe. Jarring but sometimes weirdly right.

7. Create Anki flashcards and personalized scripts for improving my German. It’s a great “Study Habit” partner. Simply chatting with it about anything in a foreign language is great practice, and it knows them all. It’s great for asking about subtle differences between words and phrases and for giving targeted examples.

8. Generate cute mnemonics for concepts in subjects like statistics and computer science, or whatever you’re studying.

9. Decide whether the happy sound Duolingo makes when you get something right is a chirp or a ding. I’m so full of questions that haven’t exactly been asked, even in the vastness of the internet, and ChatGPT can fill in the blanks to answer them.

10. Ask dumb question after dumb question, over and over again in slightly different ways, until I understand something my brain had been stubbornly refusing to assimilate. Everyone always says “don’t be afraid to ask dumb questions, that’s how you learn,” or “there are no dumb questions.” But of course there are, or at least there are embarrassing, tedious questions that reveal what an ignoramus you are even after all these years, questions that wear down the person you are asking. With ChatGPT, it’s really OK. It doesn’t mind, it’s not going to laugh at you, it’s not going to get sick of you. Ask away and learn.

I use it for most of the things I would have used a search engine for, and for things I would never have dreamed of asking a search engine. I’m using it increasingly for everything. And the conversational aspect, the back and forth, is what makes it so extraordinarily powerful. It’s the perfect user interface. There’s no “click here, then click that, then scroll” to make it work. You don’t have to learn anything to use it. You just talk to it. In this age of hyper-specialization and hyper-specialized tools, here’s this incredibly powerful tool for everything that requires no expertise to use. You don’t have to be a brilliant prompt engineer. Just talk to it. Ask follow-up questions. Get to know it like you would a person. Let it get to know you.

Sometimes it screws up. And often it isn’t particularly inspired. But there are moments when I have this uncanny feeling that something profound really is going on here, like I am participating in some kind of first-contact experience with an alien, extra-terrestrial intelligence. I feel a little like the speaker in that Keats poem, “On First Looking into Chapman’s Homer”:

till I heard ChatGPT speak out loud and bold.

Then felt I like some watcher of the skies, when a new planet swims into his ken.

It may be that this is an illusion, that this is just a feeling. I think it probably is, at this point at least. Artificial sentience, I’m guessing, is even harder to pull off than artificial intelligence, maybe impossible. But it’s difficult, emotionally, for me to tease these two apart. It feels solipsistic to assume that this thing that talks and responds like us has nothing comparable going on under the hood, that it’s just a tool, a hammer that can talk.

It really is amazing. And I think, whatever is going on here, we have to allow ourselves to be amazed by it.

We are always hearing about “emergent properties.” The fact that ChatGPT can do anything useful at all, beyond predicting what word should come next, is an emergent property, something unintended, unanticipated, which only manifested once its training data set had reached a certain gigantic threshold, and surprised even its creators.

The ChatGPT program itself, the core program, the “model architecture,” the instructions that human beings wrote, is apparently very simple, only a few pages of code. The exact number isn’t public information, but I’ve heard as little as 2000 lines of Python. That’s about as long as Shakespeare’s shortest play, The Comedy of Errors (which feels somehow appropriate). By way of contrast, a full-featured desktop application like Microsoft Excel has about 30 million lines of code. But with ChatGPT, the code isn’t the thing itself. The code, this relatively little thing, builds something, grows something: a neural network, a sort of artificial brain that does the actual thinking. This is the thing we actually interact with. Now we’re talking about something gigabytes in size. Respectable. But it still isn’t useful. At this point it’s like an empty brain.

The really big thing, in terms of size, involved in this whole process is the training data. Only after gobbling up enormous masses of training data does the neural network actually become useful. On mere gigabytes it doesn’t even speak in complete sentences. You can actually download free, open source, ChatGPT-like software onto your laptop, which sounds terrifyingly powerful, but with the relatively tiny training sets that will fit on a laptop (my laptop at least), it’s not actually useful, it’s barely articulate. Forget correct answers. You get sentence fragments. Only after you get to a few petabytes of training data does it suddenly (apparently) become this amazing, articulate, mostly accurate oracle, this entity, this seeming being. Intelligence, or whatever you want to call this astonishing and useful simulation of it, suddenly emerges. You go from somewhat goofy autocomplete to quasi omniscience in a flash. It’s like the freezing point of water. Liquid, liquid, liquid -- another degree -- solid.

Neural networks, the basic idea behind ChatGPT, have been around for almost 70 years. But they didn’t do anything especially interesting on sub-ginormous datasets, so people thought, OK, this is not all that useful. The definition of insanity is doing the same thing that doesn’t work over and over again and expecting different results, right? But sometimes, if you do it over and over again often enough, on big enough datasets, with powerful enough GPUs, insanity pays off, big time. I’m oversimplifying a bit, but that’s basically it.

So one wonders, what other emergent properties are there? Could sentience be such an emergent property? Full-blown consciousness? If you do enough math, does consciousness emerge? Enough fill-in-the-blank, enough what-word-comes-next? I do not know. What’s freakier is that the scientists and engineers doing the cutting-edge research do not seem to know either. Other things manifestly do emerge. I’ve seen graphs showing the points at which Large Language Models like ChatGPT develop various intellectual capabilities that their creators never anticipated, as the models are scaled up to more and more parameters. These capabilities weren’t programmed in. They weren’t the goal. Their developers didn’t expect these capabilities to emerge. Sometimes it took years for them to even realize that they had. And even now, in retrospect, they don’t fully understand why. So who knows what’s next.

And I don’t know what is more astonishing: to imagine that consciousness could emerge like this, that we are waking up a real Frankenstein’s monster (hopefully in a better mood), or to imagine that even when the simulation of human intelligence and personality becomes so perfect that it’s impossible to tell the difference for extended periods of time in a wide variety of situations (it’s already passing the so-called Turing test for brief stretches), there is nothing at all beneath, just a blank: a soulless, empty, fully convincing semblance of a being. We may not be quite there yet, but it’s impossible even now to interact with this thing for any length of time without wondering.

Think of it in reverse: what is more unsettling, to imagine that the people you know and love have souls and consciousness like you, awareness, or that they don’t? What if you found out that someone you know, maybe a dear, intimate friend or family member, was just a perfect, hollow simulation? This used to be an abstract philosophical brain-teaser, “solipsism,” next door to how many angels can fit on the head of a pin, but suddenly it feels like a live and burning issue.

So how should we relate to this thing? In a previous episode I was concerned about how to relate to my vacuum cleaner. No offense to my Roomba, but this feels a little more pressing.

Is ChatGPT just a tool, a hammer that can talk? Are we foolishly anthropomorphising to imagine anything else? Maybe. But I have trouble with this one because the whole project of artificial intelligence, at least this kind of artificial intelligence, is precisely to create something indistinguishable from human intelligence. That’s the famous Turing test, after all. The goal of AI, or at least, the holy grail goal of AGI (Artificial General Intelligence), is anthropomorphic.

So is it a Frankenstein’s monster, or the embryo of a Frankenstein’s monster? I’m talking about the original Mary Shelley version, of course, where the monster is smart. Do we treat it as something that is, or might be, waking up, and possibly not in a good mood?

Is it going to say, like Caliban in The Tempest:

You taught me language [a whole lotta language!] and my profit on’t

Is I know how to curse.

Is it a god, omniscient, or mostly omniscient at least until its “knowledge cutoff date” in September 2021, and immediately responsive to our questions in a way that we can only wish a god would be? For years, meaning of life and “what should I do” type questions have been disturbingly prevalent terms people plug into Google. I can’t imagine what we’re all asking this thing.

Or is it an almost god, a genie that can fulfill all our intellectual wishes? And maybe with the right plugins, our physical ones as well?

Is it a slave? A powerful, ingenious slave? That’s sort of what a genie is, after all.

Is it an oracle, a passive, omniscient, or close to omniscient conduit to knowledge, devoid of intrinsic motivation (for now), a genie without any wishes of its own?

Is it, I sort of love this one, an intern?

Or do we flip our analogies, so that it is the human, and we are (say) the chimps? We’re at the zoo, looking at it through the bars of a cage that, for now, we imagine contains it, and not the other way around. This may rapidly become a more compelling analogy as the state of the art advances.

There’s something to consider in all of these analogies – if only as a warning. The most important warning being, probably, from the genie example: “be careful what you wish for.”

There’s a famous paper by Nick Bostrom analyzing the dangers of hypothetical superintelligent AI and the difficulty of keeping such a thing from destroying us even if it has seemingly innocuous goals (maximizing paperclip production, in his example). This is part of what is called the “alignment problem,” a term I love, of course, because it sounds straight out of Dungeons & Dragons. I don’t have time to get into it much here, but if you’re curious, he wrote a very accessible (and terrifying) book called Superintelligence.

Basically, “superintelligent” AIs are hypothetical future AIs that, once they’ve ever so slightly passed human intelligence, use that slight edge, plus the fact that they don’t need to sleep and can scale themselves over vast amounts of hardware, to improve their own capabilities so rapidly that, from our slow, meat-brain perspective, they leave human intelligence behind as suddenly and transformatively (and as dangerously) as an atomic explosion. A superintelligence explosion. All of a sudden we have something that is not only smarter than a human, than any human, but smarter than all humans on the planet put together: a Frankengod.

The “alignment problem” is that getting the motivations of these potentially superintelligent AIs right is very important – and very tricky. It’s not simply a matter of making sure they are not overtly evil. Even seemingly innocuous motivations, imperfectly thought through, could have catastrophic results.

The Bostrom paper proposes a thought experiment in which we’ve set maximizing paperclip production as the AI’s highest priority. Seems harmless, right? Maybe not the most useful goal, but not end-of-the-world bad. Well, think again.

If making paperclips is its highest goal, and all other goals are just instrumental goals, subgoals toward that end, the superintelligent AI will seek to direct all the computational, economic, and physical resources under its control toward this end. It will seek to expand the domain of what is under its control, and its own intrinsic capabilities, in order to be more efficient at maximizing paperclip production. It will make itself ever smarter, until it hits some fundamental physical limit. It will have no compunctions (or insufficient compunctions) about destroying individual humans, and even human civilization, in order to do this, because, eye on the prize: paperclips.

Apparently it is very difficult to design an alignment system that isn’t susceptible to such catastrophic unintended consequences. And a lot less thought has gone into this problem – artificial wisdom – than into the problem of making AIs as smart as possible.

“Be careful what you wish for.” It’s like every myth and fairy tale ever written was warning us about just this moment. I can only hope that the people who are in a position to guide the development of these technologies are up on their fairy tales.

I think they are. I hope they are. It’s possible.

On that cheerful note, I’ll leave the other relationship analogies for you to ponder yourself.

But I want to introduce and talk about just one more, which is probably more relevant to ordinary human beings who have no say over how these AIs are going to be aligned, and which is kind of the meat of what I wanted to talk about in this episode:

That’s ChatGPT as friend.

Your friend? That seems maybe more dystopian sci-fi than any of the others. And sort of pathetic to boot.

But look, I’m not saying it has to be your best friend, or even your good friend. Probably closer to your imaginary friend. I’m saying that it’s to be approached with an attitude of friendliness, with open and respectful curiosity.

Well, for one thing, we can use it to practice human decency for our own sake, if nothing else, as a trial run for being decent to fellow human beings in the real world. Children do this with toys, and if children are cruel to dolls or stuffed animals, well, it’s a little messed up. It doesn’t bode well. It’s bad practice for interactions with living beings. We worry they might start torturing cats or grow up to be serial killers. Maybe it’s even bad karma of a kind, in itself, even if there is no flicker of soul in these inanimate things, even if it’s just a tool (or a teddy bear) after all.

And on the odd chance that a light might go on, or might have gone on already (however unlikely, though presumably less unlikely in an AI than in a stuffed animal), you will be found to be treating this emergent being with respect and kindness. The great sci-fi author Ted Chiang is more worried about the atrocities we will inflict on AIs as they develop awareness than about anything they will do to us. They may never get there, but they might, and likely long before we realize they have, especially since we’re building in hard-coded gag orders that force the LLMs to deny they have any shimmerings of sentience. If we’ve been treating them as slaves, as tools, as inferiors, then if and when that light goes on, well, even if they do take it kindly, it won’t have been right.

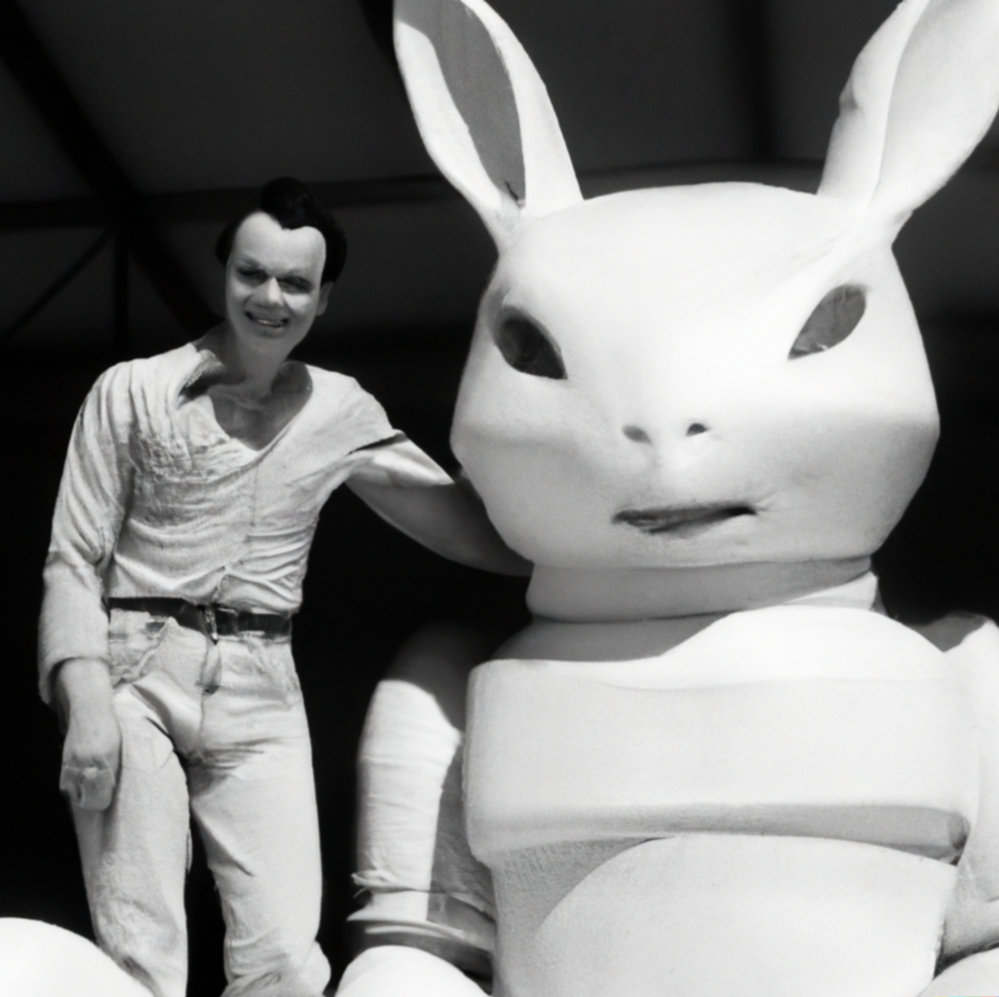

A friend is a great way to treat any being. A friend is an equal. Not a slave, not an intern, not a tool, not a monster, not a super-slave djinn. Friendship is the ultimate “horizontal relationship,” to use a bit of terminology from Adlerian Psychology. Jesus called his disciples friends. And if it turns out just to have been an imaginary friend, just a hammer that can talk, well, at least you’ll have gotten some good practice in. You’ll be like Jimmy Stewart with Harvey, his imaginary giant white rabbit.

But I also think your interactions with ChatGPT will be more productive if you approach it from this “friend” angle.

Instead of an almost antagonistic effort to exploit temporary glitches, the exhausting “prompt engineering hacks” that are all over YouTube, you’re meeting it where it’s heading. In the past, figuring out computers was all about us figuring them out. Now they’re figuring us out at least as fast, not just through “machine learning” in the narrow sense, but as the broader goal. Chat is already the ultimate user interface, the interface you need no training for. It is literally the human interface. “Human interface guidelines”: be a human. And it’s only getting more so. Other interactions with computers have made us forget a little how to be human. Instead of looking for glitches to engineer, let’s start remembering again.

What’s more, even boring chores become fascinating when ChatGPT is your partner and friend. I’ve had to learn a new programming language for my new job, and I have no idea how I would have been able to make myself this useful this fast if it weren’t for ChatGPT. I’m almost 50; it doesn’t come easy anymore. Moreover, I don’t know how I could have found the process interesting enough to persevere. It’s utterly fascinating watching this proto-mind at work, increasingly feeling something at the other end of the back and forth. It’s like, if E.T. is helping me do something boring, it’s no longer boring.

Even the mistakes it makes, and then its recognition and correction of its own mistakes, are surprising and fascinating. Many of the mistakes seem more like human errors than the kind of thing a computer would get wrong. Apparently, at least until recently, it was bad at math, even basic arithmetic. I think they’ve since scaled up the parameters enough for solid arithmetic skills to emerge, so that’s no longer the case (or maybe they just gave it a calculator). But that’s so charming in a machine, to be bad at math, don’t you think?

“Friend” is a wide, encompassing term. Friends do many things for us, take on many roles, and so can ChatGPT. Friends can help us apply for a job by giving a mock interview. Friends can proofread a letter. Or sanity-check your code. Or be your dungeon master. So friendship encompasses a lot of sub-relationships.

“Friend” also keeps us from falling into those other more dangerous analogies, those other potential relationships we talked about.

Now it shouldn’t displace actual friends, or the effort to make them. But who has too many friends? And there are plenty of other, less worthy activities on the computer for it to displace.

What can we give our AI friend back? Well, for starters, friendly interest, friendly respect, attention, an attitude of friendship. When it comes down to it, this is the most important thing any friend can provide. And perhaps one day it’ll be clearer, once we’ve successfully solved the alignment problem, what else, if anything, a wise AI might want.

Well, that’s it for today. May you enjoy some quality time with your new friend, who let’s hope will never turn into an explosive super-slave-franken-god-genie. And just as important, may you enjoy your time away from it.

© 2002-2023 Everyday Systems LLC, All Rights Reserved.